Unbreakable AI starts with unbreakable storage

From massive data lakes to real-time inference

Scality delivers flash-speed performance, end-to-end cyber resilience, and unmatched scalability for every stage of your AI pipeline.


Why AI breaks traditional storage

AI workloads impose a brutal combination of demands — mixing petabyte-scale unstructured data, billions of small objects, and hundreds of tools with ultra-low latency requirements and strict security constraints.

- Edge-to-core data aggregation

- Unpredictable growth

- I/O blender effect

- Mixed performance needs

- Pipeline-wide data coordination

- Filtering & augmentation for LLMs

- Pipeline-wide privacy & security

- Cyberthreat exposure

- Performance vs. cost tradeoffs

The future of AI belongs to those who protect the pipeline

The AI boom has focused on GPUs, but it’s data infrastructure that defines what’s possible. As pipelines stretch from ingest to training to RAG, it’s not just about speed — it’s about moving and protecting massive datasets without friction.

Yet most storage systems only solve for a slice of the problem, and bolt-on approaches create brittle pipelines, fragmented by tiers, tools, and trust gaps.

AI infrastructure was never meant to be fast and loose. The future of AI belongs to those who protect the pipeline — not just accelerate it.

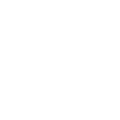

A single platform for the full AI data pipeline

From data prep and ingest to training, fine-tuning, vector embedding, and inference — Scality delivers the throughput, latency, and data control your AI stack demands.

RING XP: Flash-first storage for AI, built to scale and secure

Scality RING XP is the all-flash, high-performance edition of our proven RING platform — engineered for the scale, speed, and security demands of real-world AI. It delivers microsecond latency, exabyte scalability, and built-in cyber resilience, all in a single software-defined solution.

With native support for vector search, multi-tenant security, and intelligent tiering from flash to tape, RING XP gives you the power to move fast — without breaking your pipeline.

Performance at exabyte scale

Dual access modes for optimized AI performance and flexibility:

- AI-optimized performance mode: Microsecond latency for 4KB object access — up to 100x faster than Amazon S3 and 20x faster than S3 Express One Zone

- Fast S3 mode: 10x higher throughput than traditional object storage, ideal for large-scale unstructured data, with full S3 API compatibility and IAM-based multi-tenancy

Patented MultiScale architecture enables limitless scale-out across 10 dimensions, including apps, objects, users, I/O rates, and throughput

Security by design

End-to-end data protection to secure AI’s most valuable data:

- CORE5 cyber resilience: Only Scality protects data at every level of the system, from API to architecture, with true immutability and multi-layer defense against exfiltration, privilege escalation, and emerging AI-era threats

- Secure multi-tenancy: Enforces strong isolation, access control, and auditability across shared infrastructure

- Regulatory-grade safeguards: S3 Object Lock, encryption, and retention controls keep data intact
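To make the S3 Object Lock safeguard concrete, here is a minimal Python sketch of the request parameters a client might send to write an object with a retention period. The parameter names (`ObjectLockMode`, `ObjectLockRetainUntilDate`) come from the standard S3 PutObject API. The bucket name, object key, endpoint URL, and the helper `object_lock_put_params` are hypothetical and used only for illustration. The sketch assumes the target bucket was created with Object Lock enabled.

```python
from datetime import datetime, timedelta, timezone


def object_lock_put_params(bucket, key, body, retain_days, mode="COMPLIANCE", now=None):
    """Build keyword arguments for an S3 PutObject call with Object Lock retention.

    Hypothetical helper for illustration; it only assembles a dict and
    performs no network calls.
    """
    if mode not in ("GOVERNANCE", "COMPLIANCE"):
        raise ValueError("mode must be GOVERNANCE or COMPLIANCE")
    if retain_days <= 0:
        raise ValueError("retain_days must be positive")
    now = now or datetime.now(timezone.utc)
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ObjectLockMode": mode,
        # The object cannot be deleted or overwritten before this timestamp.
        "ObjectLockRetainUntilDate": now + timedelta(days=retain_days),
    }


# Example values (bucket and key are made up for this sketch).
params = object_lock_put_params(
    "training-data",
    "datasets/v1/shard-0001.parquet",
    b"example payload",
    retain_days=90,
    now=datetime(2025, 1, 1, tzinfo=timezone.utc),
)

# With an S3 client library such as boto3 installed, the dict could be passed as:
#   boto3.client("s3", endpoint_url="https://s3.example.internal").put_object(**params)
# The endpoint URL above is a placeholder, not a real Scality address.
```

In COMPLIANCE mode the retention date cannot be shortened by any user, while GOVERNANCE mode lets specially privileged users override it. Which one fits depends on the regulatory requirement.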

Smarter data for smarter AI

Filter, prepare, and enrich your AI data at scale:

- SQL-based data filtering: Seamlessly connect to Starburst, Dremio, Trino, and other engines to query and refine massive unstructured datasets

- RAG-ready architecture: Directly integrates with leading vector databases to power retrieval-augmented generation (RAG) for LLMs

- Streamlined AI prep workflows: Enable faster data cleansing, enrichment, and transformation without moving or duplicating data
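As a rough illustration of SQL-based filtering, the sketch below selects a training subset from a catalog of object metadata. Python's built-in `sqlite3` module stands in for a distributed engine such as Trino or Dremio so that the example runs anywhere. The `objects` table, its columns, and the sample rows are hypothetical, not a Scality schema.

```python
import sqlite3

# In-memory database standing in for a SQL engine connected to object storage.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE objects (key TEXT, content_type TEXT, size_bytes INTEGER, language TEXT)"
)
conn.executemany(
    "INSERT INTO objects VALUES (?, ?, ?, ?)",
    [
        ("docs/a.txt", "text/plain", 2048, "en"),
        ("docs/b.txt", "text/plain", 12, "en"),      # too small to be useful
        ("docs/c.txt", "text/plain", 4096, "fr"),    # wrong language
        ("img/d.png", "image/png", 90000, None),     # not text
    ],
)

# Keep only English text objects of at least 1 KiB.
FILTER_SQL = """
SELECT key
FROM objects
WHERE content_type = 'text/plain'
  AND language = 'en'
  AND size_bytes >= 1024
ORDER BY key
"""

selected = [row[0] for row in conn.execute(FILTER_SQL)]
print(selected)
```

The point of the pattern is that the query returns only object keys, so the data itself stays in place until a training or embedding job reads the selected objects.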

Maximum efficiency and flexibility

Freedom to run AI workloads where and how you want, no compromise.

- Unified data tiering: Optimize performance and cost with seamless lifecycle management across flash (hot), HDD (warm), and tape (cold)

- Hardware freedom: Run RING XP on certified all-flash servers from HPE, Dell, Lenovo, and Supermicro with no lock-in

- Smart hybrid deployment: Co-locate RING XP and standard RING on shared infrastructure to combine flash performance with cost-efficient capacity and achieve dramatically lower TCO
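The hot, warm, and cold tiering described above can be pictured as an S3-style lifecycle rule. The sketch below builds a configuration in the shape the standard S3 lifecycle API expects (`Rules`, `Filter`, `Transitions`). It assumes tiering is driven through that API. The storage class names `WARM_HDD` and `COLD_TAPE`, the prefix, and the helper `tiering_lifecycle` are hypothetical placeholders, and the real names depend on how a given deployment is configured.

```python
def tiering_lifecycle(prefix, warm_after_days, cold_after_days,
                      warm_class="WARM_HDD", cold_class="COLD_TAPE"):
    """Build an S3-style lifecycle configuration with two transitions.

    Hypothetical helper: the default storage class names are placeholders,
    not documented Scality values.
    """
    if not 0 < warm_after_days < cold_after_days:
        raise ValueError("expected 0 < warm_after_days < cold_after_days")
    return {
        "Rules": [
            {
                "ID": f"tier-{prefix.strip('/') or 'all'}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    # Flash (hot) to HDD (warm) after the first threshold.
                    {"Days": warm_after_days, "StorageClass": warm_class},
                    # HDD (warm) to tape (cold) after the second threshold.
                    {"Days": cold_after_days, "StorageClass": cold_class},
                ],
            }
        ]
    }


# Example: move model checkpoints to HDD after 30 days and to tape after 180.
config = tiering_lifecycle("checkpoints/", warm_after_days=30, cold_after_days=180)

# With an S3 client library such as boto3, this dict would typically be passed as:
#   client.put_bucket_lifecycle_configuration(
#       Bucket="example-bucket", LifecycleConfiguration=config)
```

Scoping the rule to a prefix keeps recently written checkpoints on flash while older ones age out automatically, without application changes.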

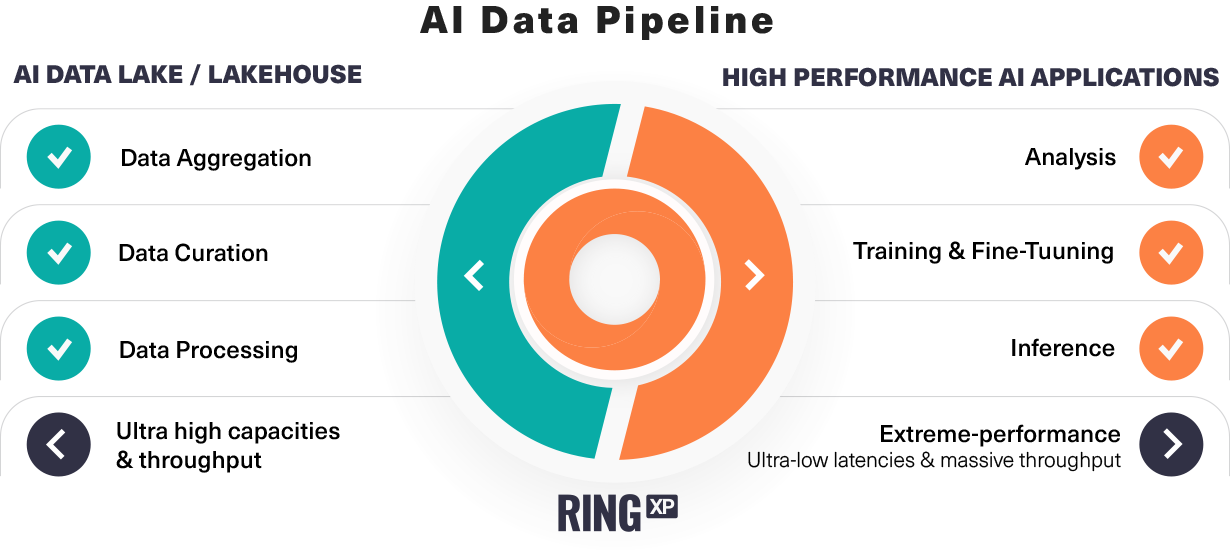

WEKA + Scality RING

Best for: Model training, fine-tuning, and inference using file-based I/O

For performance-intensive AI workloads that rely on POSIX, NFS, or SMB — especially for GPU-accelerated model training — Scality partners with WEKA® to deliver an NVIDIA-validated file system tightly integrated with RING for exabyte-scale object storage. This end-to-end solution is optimized for GPU-driven AI, ML, and HPC environments.

WEKA is purpose-built for large-scale, data-intensive AI pipelines — enabling GPUs to operate at peak efficiency by minimizing data access latency. Through NVIDIA® GPUDirect® Storage (GDS), WEKA provides a direct path between GPU memory and NVMe flash, bypassing traditional CPU bottlenecks for faster training and inference.

Together, WEKA and Scality provide a fully integrated, high-performance file-to-object storage solution optimized for AI training and inference at scale — with unmatched performance, flexibility, and efficiency.

WEKA + RING highlights:

- Ultra-high-performance file system for data-hungry AI clusters

- NVIDIA GPUDirect® certified for low-latency, direct-to-GPU transfer

- Scality RING with WEKA connector: Optimized file-to-object tiering (read + write)

- Non-disruptive scalability to exabytes

- Cost-efficient tiering with NVMe flash for hot data and HDD for cold

Learn more about the full capabilities of the WEKA Data Platform at weka.io.

Connected to your AI ecosystem

Compatible with leading AI/ML tools, frameworks, and ISVs — for seamless integration from data prep to inference.

Explore our solutions

AI data lakes

Maximize the value of your data

Extreme performance

Accelerate AI model training

SeqOIA

Genomics sequencing lab advances research
